Scaling

FlexiCapture can process from several hundred to millions of pages per day and supports up to several thousand operators. With the guidelines in this paper, you can estimate the System load in advance and select both the appropriate architecture and the hardware for the servers.

The System scales up by:

- increasing the number of scanning clients, verification clients, and Processing Stations;

- increasing the power of the machines hosting the Application, Processing, Licensing, and Database servers and the FileStorage, or using several machines for these roles.

The figures below help you assess or select a preliminary configuration of the FlexiCapture server components.

| Pages in 24 hrs (black-and-white only) | Pages in 24 hrs (grayscale only) | Pages in 24 hrs (color only) | Number of processing cores | Number of verification operators | Number of scanning operators | Configuration |
|---|---|---|---|---|---|---|
| 20,000 | 5,000 | 1,000 | 8 | 3 | 3 | Demo |
| 1 million | 500,000 | 300,000 | 80 | 100 | 300 | Medium |
| 3 million | 2 million | 1 million | 120 | 500 | 1,000 | Large (Medium 10 Gb/s) |
| Much more | | | | | | xLarge (combination of ABBYY FlexiCapture installations) |
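The sizing table can be read as a lookup: find the smallest configuration whose daily page limits cover the expected load. The sketch below encodes the table's page columns. The table only gives limits for pure black-and-white, grayscale, or color loads, so the way a mixed load is handled here (summing each mode's share of the tier's limit) is an assumption of this sketch, not an ABBYY rule; the mode keys `bw`, `gray`, and `color` are likewise hypothetical names.

```python
# Daily page limits per configuration, copied from the sizing table.
# Tiers are listed from smallest to largest; the first one that fits wins.
TIERS = [
    ("Demo", {"bw": 20_000, "gray": 5_000, "color": 1_000}),
    ("Medium", {"bw": 1_000_000, "gray": 500_000, "color": 300_000}),
    ("Large", {"bw": 3_000_000, "gray": 2_000_000, "color": 1_000_000}),
]


def select_configuration(pages_per_day: dict[str, int]) -> str:
    """Return the smallest configuration that covers the daily load.

    pages_per_day maps a color mode ("bw", "gray", "color") to pages per 24 hrs.
    ASSUMPTION: a mixed load fits a tier if the sum of each mode's fraction
    of that tier's limit does not exceed 1.0.
    """
    for name, limits in TIERS:
        utilization = sum(pages / limits[mode] for mode, pages in pages_per_day.items())
        if utilization <= 1.0:
            return name
    # Beyond the Large limits: several FlexiCapture installations are needed.
    return "xLarge"
```

For example, `select_configuration({"color": 400_000})` returns `"Large"`, which matches the rule that more than 300,000 color pages per day call for the Large configuration. The result is only a starting point; the processing cores and operator counts in the table still have to be checked separately.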

Monitoring for bottlenecks helps you determine when the hardware in use is no longer sufficient for the desired performance and it is time to scale up.

Demo is a typical configuration for demonstrations or pilot projects and is not recommended for production-scale projects. All the System components are installed on a single virtual machine or PC.

| Machine Role | Requirements |
|---|---|
| ABBYY FlexiCapture | 1 computer: 4-core CPU, 2.4 GHz; 8 GB RAM; HDD:; OS: Windows 2012 or later |

MS SQL Express may be used as the database server and installed on the same machine as the FlexiCapture servers. Instead of using a separate FileStorage, files can be stored directly in the database. Operator stations and Processing Stations can also be installed on the same machine.

Note: In commercial projects, the Processing Station should never be installed on a computer hosting the FlexiCapture servers or the Database server, because it consumes all available resources and server performance deteriorates.


Medium is a typical configuration for commercial projects, because it is scalable: each server component is installed on a dedicated machine.

The Application Server should be installed on a dedicated machine, because its scaling-up approach differs from that of the Database, Processing, and Licensing servers.

Note: Technically, the Application Server, Processing Server, and Licensing Server can be installed on the same computer. Server redundancy will be ensured, but the Application Server’s scalability will not.


- The Application Server is a web service in IIS; its scaling and reliability are achieved by clustering based on Microsoft Network Load Balancing technology. All cluster nodes are peers running in active-active mode, and any of them can be switched off at any time.

- The Processing Server and Licensing Server are Windows services; their reliability is achieved by creating an active-passive cluster based on Microsoft Failover Cluster technology.

Microsoft clearly prohibits the use of these technologies together on the same computer.

If reliability is all you need, you can instead cluster the Application Server with Microsoft Failover Cluster, which IIS also supports.

Licensing and Processing servers can be installed on the same machine.

We recommend installing the Database Server on a dedicated machine. It is very resource-intensive: if you do combine it with other FlexiCapture servers, restrict its CPU and RAM usage and place the database files on a physically separate HDD so that it does not degrade the performance of the neighboring servers.
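The co-location rules above (no Processing Station next to servers, no Network Load Balancing and Failover Cluster roles on one computer, a dedicated Database Server) can be checked mechanically before deployment. The sketch below is a hypothetical pre-deployment checker: the role strings, the `check_layout` function, and its flags are illustrations invented for this example, not part of FlexiCapture, and it assumes a commercial project.

```python
# Hypothetical role labels used only by this sketch.
APP, PROC, LIC, DB, STATION = (
    "Application Server", "Processing Server", "Licensing Server",
    "Database Server", "Processing Station",
)
SERVER_ROLES = {APP, PROC, LIC, DB}


def check_layout(hosts: dict[str, set[str]], app_uses_nlb: bool = True,
                 services_use_failover: bool = True) -> list[str]:
    """Return warnings for a commercial-project layout (host name -> roles)."""
    warnings = []
    for host, roles in hosts.items():
        # A Processing Station consumes all resources: keep it off server machines.
        if STATION in roles and roles & SERVER_ROLES:
            warnings.append(f"{host}: Processing Station shares the machine with a server")
        # NLB (Application Server) and Failover Cluster (Processing/Licensing
        # Servers) must not be combined on the same computer.
        if app_uses_nlb and services_use_failover and APP in roles and roles & {PROC, LIC}:
            warnings.append(f"{host}: NLB and Failover Cluster roles on one computer")
        # The Database Server is recommended to run on a dedicated machine.
        if DB in roles and len(roles) > 1:
            warnings.append(f"{host}: Database Server is not on a dedicated machine")
    return warnings
```

A layout such as `{"app1": {APP}, "svc1": {PROC, LIC}, "sql1": {DB}, "ps1": {STATION}}` produces no warnings, while putting the Application, Processing, and Licensing servers on one clustered host is flagged. The Database Server check is a warning rather than an error, since the text allows combining it with other servers if its resources are restricted.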

For small loads and better performance, you can use fast HDDs on the Application Server machine as the FileStorage: e.g. 15,000 RPM or faster SATA2 disks, arranged in at least RAID1 for redundancy, or in RAID10 for both redundancy and better performance.

At later stages of the project, however, if the volume of pages to process increases, this configuration will likely become a bottleneck, especially when processing grayscale or color images. Moreover, it cannot be scaled up on the fly: attaching additional HDDs requires taking the System down.

Instead, use external storage such as NAS or SAN, to which the Application Server has read-write access at 1 Gb/s over LAN, SCSI, Fibre Channel, etc. This allows the FileStorage to be scaled up smoothly.

The FileStorage section below explains how to calculate the required performance of the FileStorage hardware.

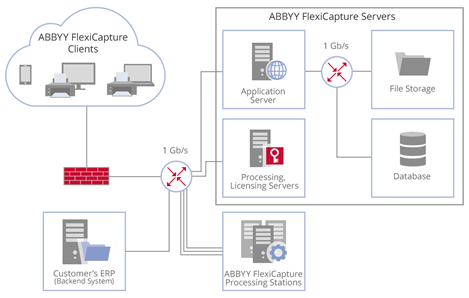

A typical FlexiCapture network configuration in an enterprise environment:

Note: For fast and reliable communication, we recommend connecting the Application Server directly to the FileStorage and the Database Server.

| Machine Role | Requirements |
|---|---|
| Application Server | CPU: 8 physical cores, 2.4 GHz or faster; 16 GB RAM; HDD: 100 GB; 2 NICs, 1 Gb/s; FileStorage: if SAN is used, connect it using SCSI, Fibre Channel, or InfiniBand; OS: Windows 2012 or later |


A web service and the hub of all FlexiCapture communications, the Application Server is responsible for both:

Critical resources are:

To make the most of the CPU, set the number of IIS Worker Processes for the FlexiCapture Web Services Application pool to twice the number of physical cores, e.g. 16 IIS Worker Processes for an 8-core processor.
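The worker-process rule is simple arithmetic, but it is easy to apply to the wrong core count. The sketch below computes the value and builds (without running) an `appcmd.exe` command that sets the application pool's Maximum Worker Processes (`processModel.maxProcesses`). The pool name passed in is an assumption: check the actual name of the FlexiCapture Web Services application pool in IIS Manager before using the command.

```python
def iis_worker_processes(physical_cores: int) -> int:
    """Twice as many IIS Worker Processes as physical CPU cores."""
    if physical_cores < 1:
        raise ValueError("physical_cores must be a positive integer")
    return 2 * physical_cores


def appcmd_command(pool_name: str, physical_cores: int) -> str:
    """Build an appcmd.exe command string that sets the pool's
    Maximum Worker Processes; the command is returned, not executed."""
    n = iis_worker_processes(physical_cores)
    return (r"%windir%\system32\inetsrv\appcmd.exe set apppool "
            f'/apppool.name:"{pool_name}" /processModel.maxProcesses:{n}')
```

Note that Python's `os.cpu_count()` reports logical processors, which on a machine with Hyper-Threading is typically twice the number of physical cores, so it should not be passed in directly; the rule above refers to physical cores.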

If any of these resources causes a bottleneck, scale up the Application Server:

In any case, all machines in the Application Server role should be equally connected to the same Database and FileStorage.


| Machine Role | Requirements |
|---|---|
| Processing Server, Licensing Server | 4-core CPU, 2.4 GHz or faster; 8 GB RAM; HDD: 100 GB; NIC 1 Gb/s for connecting to LAN; OS: Windows 2012 or later |


The servers require a stable network connection; otherwise, document processing stops. To ensure redundancy, use Microsoft Failover Cluster; see the detailed instructions in the FlexiCapture System Administrator’s Guide. The Licensing Server keeps copies of the licenses for all concurrent clients in memory, so take this into account if you plan to have a large number of scanning and verification operators working simultaneously. We also recommend using the 64-bit version in projects involving a large number of concurrent clients. Our tests have shown that 2 GB of RAM is enough to handle licenses for up to 1,000 clients. To serve more concurrent clients, consider using more than one Licensing Server.
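The single test data point above (2 GB of RAM for up to 1,000 clients) can be turned into a rough planning estimate. The sketch below assumes, without confirmation from the product documentation, that license memory grows linearly with the number of clients and that clients are spread evenly across Licensing Servers; `max_clients_per_server` is a hypothetical planning limit, not a product setting. The result covers license memory only, not the full server RAM requirement listed in the table.

```python
import math

TESTED_CLIENTS = 1000  # clients handled in the quoted test
TESTED_RAM_GB = 2.0    # RAM that was enough for TESTED_CLIENTS licenses


def licensing_plan(concurrent_clients: int,
                   max_clients_per_server: int = TESTED_CLIENTS) -> tuple[int, float]:
    """Return (number of Licensing Servers, estimated license RAM per server in GB).

    ASSUMPTIONS: linear memory growth per client and an even split of
    clients across servers; both are extrapolations from one test result.
    """
    servers = max(1, math.ceil(concurrent_clients / max_clients_per_server))
    clients_per_server = math.ceil(concurrent_clients / servers)
    ram_gb = TESTED_RAM_GB * clients_per_server / TESTED_CLIENTS
    return servers, ram_gb
```

For 2,500 concurrent clients, this sketch suggests 3 servers with about 1.7 GB of license memory each. Whether staying within the tested 1,000 clients per server is actually necessary is a design choice; the cap simply keeps each server inside the range that was measured.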


| Machine Role | Requirements |
|---|---|
| Database Server | For MS SQL Server: Database: MS SQL Server 2014 or higher, Standard or Enterprise Edition; Hardware: CPU: 8 physical cores, 3.4 GHz or faster; 16 GB RAM or more; HDD: 400 GB; OS: Windows 2012 or later. For Oracle: Database: Oracle 12c Enterprise Edition; Hardware: Oracle Exadata Database Machine X2-2, Quarter Rack |


ABBYY FlexiCapture supports MS SQL Server and Oracle installed on any platform. Both database vendors publish their own recommendations on optimal settings, scaling, and fault tolerance. Recommended for MS SQL Server:


| Machine Role | Requirements |
|---|---|
| FileStorage | NAS or SAN, connected via LAN, SCSI, Fibre Channel, or InfiniBand; Read-write speed: 100 MB/s*; Capacity: 5 TB* |


*Read-write and capacity requirements depend heavily on two factors:

1. The average and peak numbers of pages processed per day (i.e. 24 hours) and per hour, and their color mode. As mentioned in the Performance Metrics section, we can estimate the input flow in bytes per second by taking typical file sizes for pages scanned in color, grayscale, and black-and-white. Images make up the majority of the data transferred within the System. By analyzing the processing workflow, let’s define the 2 values:

The read-write speed requirements can be calculated as follows:

Example. A customer needs to process 10,000 grayscale pages per hour. The processing workflow includes 3 stages.

- Input flow = 10,000 grayscale page images/hour = 2.8 grayscale images/s × 3 MB = 8.4 MB/s.
- Required write speed = 1 × 8.4 MB/s = 8.4 MB/s.
- Required read speed = 3 × 8.4 MB/s = 25.2 MB/s.

To benchmark the performance of the hard disk, you can use the CrystalDiskMark tool, distributed under the MIT license.

2. The amount of time that documents are stored in the System.

Example. A customer needs to process 100,000 grayscale images in 24 hours. Under the Service-Level Agreement, processing time is 2 days per document. Processed documents are stored for 2 weeks because of additional checks in the customer’s ERP system; if any discrepancies are found, documents are edited in FlexiCapture and uploaded to the ERP system again. Thus, images must be stored for 2 + 14 = 16 days, and the System will accumulate 16 × 100,000 grayscale images × 3 MB (average file size for an A4 grayscale image) = 4.8 TB of data.
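Both FileStorage examples follow the same arithmetic, so it can be wrapped in two small functions for trying out other loads. This is a sketch under the assumptions used in the examples: every image is written once and read once per processing stage, and the average image size is supplied by you (only the 3 MB grayscale figure comes from this text). Decimal units are used, so 1 TB = 1,000,000 MB.

```python
def filestorage_speed(pages_per_hour: float, avg_image_mb: float,
                      writes_per_image: int, reads_per_image: int) -> tuple[float, float, float]:
    """Return (input flow, required write speed, required read speed) in MB/s."""
    input_mb_s = pages_per_hour / 3600 * avg_image_mb
    return input_mb_s, writes_per_image * input_mb_s, reads_per_image * input_mb_s


def filestorage_capacity_tb(pages_per_day: float, avg_image_mb: float,
                            retention_days: float) -> float:
    """Data accumulated over the retention period, in decimal terabytes."""
    return pages_per_day * avg_image_mb * retention_days / 1_000_000


# Example 1: 10,000 grayscale pages/hour, written once, read at each of 3 stages.
flow, write, read = filestorage_speed(10_000, 3.0, writes_per_image=1, reads_per_image=3)

# Example 2: 100,000 grayscale pages/day kept for 2 (SLA) + 14 (ERP checks) days.
capacity = filestorage_capacity_tb(100_000, 3.0, retention_days=2 + 14)
```

Without intermediate rounding, the first example gives about 8.3 MB/s of input flow and 25.0 MB/s of read speed; the figures of 8.4 MB/s and 25.2 MB/s in the text come from rounding 2.78 images/s up to 2.8 first. The second example gives 4.8 TB, as in the text. Size the hardware for peak hourly load rather than the average.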


The Large configuration is required when you process a significant volume of color pages (more than 300,000 in 24 hours). It is rated for up to 3 million black-and-white pages or up to 1 million color pages in 24 hours.

Everything said above about the Medium configuration also applies to the Large configuration. The difference is that you must follow all optimization recommendations and pay special attention to each part of the System: calculate the load and choose hardware that is sufficiently powerful, yet not too expensive. Among other things, test the Internet connection and the backend connector to make sure they can operate at the desired performance level.

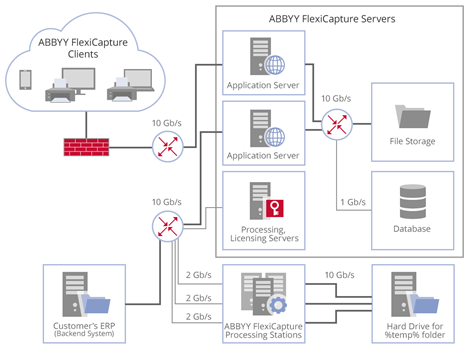

From the very beginning, consider using a 10 Gb/s network and a powerful FileStorage. Possible network architecture for the Large configuration is shown below.

Rather than listing typical system requirements for the Large configuration, we recommend reviewing the tested configurations and their performance results provided in this document.

To achieve even higher performance, combine several independent FlexiCapture installations under a single Administration and Monitoring point. This setup, referred to as the xLarge configuration, is beyond the scope of this document.

12.04.2024 18:16:02